Architecture enhancements

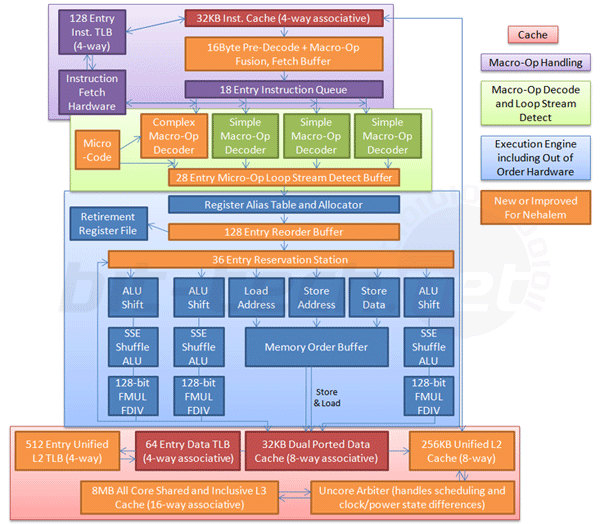

In some areas Nehalem builds on the foundations of Penryn's advanced Core 2 architecture, while in others it has been redesigned to accommodate the needs of Hyper Threading. Penryn's pipeline was a very nippy 14 stages, whereas Nehalem extends this quite considerably to 20-24 stages.

Branch prediction has been improved yet again. Predicting which way a branch will go lets the processor fetch and execute instructions ahead of time instead of waiting for the branch to resolve. This matters even more with Hyper Threading and multi-threaded applications, because a mispredicted branch wastes execution resources that the other thread could have used. Nehalem's enhancements include an improved second-level (L2) branch predictor with better accuracy for large code sizes (database applications, for example), and an Advanced Renamed Return Stack Buffer (RSB) that removes branch mispredicts on x86 RET (return) instructions.
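Intel hasn't detailed how its renamed RSB works internally, but the basic idea of a return stack buffer is simple enough to sketch. The toy Python model below assumes that "renamed" means the buffer can be checkpointed when a branch is predicted and restored on a mispredict, so CALLs and RETs executed down the wrong path don't corrupt later return predictions. The class name, the 16-entry depth and the checkpoint scheme are all our assumptions, not Intel's design.

```python
from typing import List, Optional


class ReturnStackBuffer:
    """Toy return stack buffer (RSB) that predicts RET targets.

    Assumption: the 16-entry depth and the snapshot-based checkpointing are
    illustrative only, not Nehalem's actual implementation.
    """

    def __init__(self, depth: int = 16) -> None:
        self.depth = depth
        self.stack: List[int] = []

    def on_call(self, return_address: int) -> None:
        # A CALL pushes the address of the instruction that follows it.
        if len(self.stack) == self.depth:
            self.stack.pop(0)  # when full, the oldest entry is lost
        self.stack.append(return_address)

    def predict_ret(self) -> Optional[int]:
        # A RET is predicted to return to the most recently pushed address.
        return self.stack.pop() if self.stack else None

    def checkpoint(self) -> List[int]:
        # Snapshot the buffer when a conditional branch is predicted.
        return list(self.stack)

    def restore(self, snapshot: List[int]) -> None:
        # On a mispredict, throw away the effects of wrong-path CALLs and RETs.
        self.stack = list(snapshot)


# Usage: a wrong-path RET would normally consume the entry; restoring fixes it.
rsb = ReturnStackBuffer()
rsb.on_call(0x401000)
snap = rsb.checkpoint()
rsb.predict_ret()   # speculative, wrong-path RET pops the entry
rsb.restore(snap)   # branch resolved as mispredicted: roll back
print(hex(rsb.predict_ret()))  # 0x401000
```

Without the restore step, the next genuine RET would find an empty buffer and have to fall back on a slower, less accurate prediction.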

The 32KB first-level caches and the 128-entry (4-way associative) first-level TLBs remain the same for data and instructions, but Nehalem also adds a low-latency, 512-entry unified second-level TLB for small (4K) pages covering both data and instructions, up from a 256-entry data-only L2 TLB in Penryn. As an application's data set grows, the chance of a TLB miss increases, so the larger, more general L2 TLB backs up the first-level TLBs, especially now that two threads compete for L1 TLB entries.
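Why a second TLB level helps can be shown with a small simulation. The Python sketch below uses the entry counts quoted above (128 for the L1 TLB, 512 for the L2 TLB) but, as a simplifying assumption, treats both as fully associative caches with LRU replacement, which the real 4-way set-associative TLBs are not. The page-table mapping is made up for the example.

```python
from collections import OrderedDict
from typing import Dict, Optional

PAGE_SIZE = 4096  # small (4K) pages


class LRUTLB:
    """TLB modelled as a fully associative LRU cache of page translations.

    Simplification: the real TLBs are set associative (e.g. 4-way).
    """

    def __init__(self, entries: int) -> None:
        self.entries = entries
        self.map: "OrderedDict[int, int]" = OrderedDict()

    def lookup(self, vpn: int) -> Optional[int]:
        if vpn in self.map:
            self.map.move_to_end(vpn)  # mark as most recently used
            return self.map[vpn]
        return None

    def insert(self, vpn: int, pfn: int) -> None:
        self.map[vpn] = pfn
        self.map.move_to_end(vpn)
        if len(self.map) > self.entries:
            self.map.popitem(last=False)  # evict the least recently used entry


def translate(addr: int, l1: LRUTLB, l2: LRUTLB,
              page_table: Dict[int, int], stats: Dict[str, int]) -> int:
    """Translate a virtual address: L1 TLB, then L2 TLB, then a page walk."""
    vpn, offset = divmod(addr, PAGE_SIZE)
    pfn = l1.lookup(vpn)
    if pfn is not None:
        stats["l1_hits"] += 1
    else:
        pfn = l2.lookup(vpn)
        if pfn is not None:
            stats["l2_hits"] += 1
        else:
            stats["page_walks"] += 1
            pfn = page_table[vpn]  # slow walk through the page tables
            l2.insert(vpn, pfn)
        l1.insert(vpn, pfn)  # refill the L1 TLB either way
    return pfn * PAGE_SIZE + offset


# Usage: sweep 300 pages twice, more than the L1 TLB holds.
l1, l2 = LRUTLB(128), LRUTLB(512)
page_table = {vpn: vpn + 1000 for vpn in range(300)}  # made-up mapping
stats = {"l1_hits": 0, "l2_hits": 0, "page_walks": 0}
for _ in range(2):
    for vpn in range(300):
        translate(vpn * PAGE_SIZE, l1, l2, page_table, stats)
print(stats)  # {'l1_hits': 0, 'l2_hits': 300, 'page_walks': 300}
```

The L1 TLB thrashes on this access pattern, but every repeat access is caught by the L2 TLB. With a 128-entry L2 TLB instead, all 600 accesses in this sweep would need a page walk.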

While Penryn and Conroe pushed Macro-Op fusion as a performance feature, combining certain pairs of x86 instructions (such as a compare followed by a conditional jump) so that they are decoded and executed as a single operation, it only worked in 32-bit mode. That made it, at best, fractionally useful on 64-bit systems that ran some 32-bit code. Nehalem updates the Macro-Op fusion engine to finally handle 64-bit code, so while we power users will see some benefit, servers and workstations that have run mostly 64-bit software for years should see the greatest improvements.
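To illustrate what changed, here is a hypothetical Python sketch of a decoder front end that fuses a CMP or TEST followed by a conditional jump into one operation. The fusion rules are simplified (real hardware only fuses certain compare and jump combinations), and the fuse_in_64bit flag is our own stand-in for the Core 2 versus Nehalem difference described above.

```python
from typing import List, Tuple

Instr = Tuple[str, str]  # hypothetical (mnemonic, operands) representation

FLAG_SETTERS = {"cmp", "test"}
COND_JUMPS = {"je", "jne", "jl", "jge", "jb", "jae"}  # illustrative subset


def decode(instrs: List[Instr], long_mode: bool, fuse_in_64bit: bool) -> List[tuple]:
    """Decode a sequence, fusing CMP/TEST + conditional jump pairs where allowed."""
    can_fuse = fuse_in_64bit or not long_mode
    ops: List[tuple] = []
    i = 0
    while i < len(instrs):
        mnemonic, operands = instrs[i]
        nxt = instrs[i + 1] if i + 1 < len(instrs) else None
        if can_fuse and mnemonic in FLAG_SETTERS and nxt is not None and nxt[0] in COND_JUMPS:
            # One fused operation replaces two separate ones.
            ops.append(("fused", f"{mnemonic} {operands}", f"{nxt[0]} {nxt[1]}"))
            i += 2
        else:
            ops.append((mnemonic, operands))
            i += 1
    return ops


# Usage: the tail of a simple counting loop running in 64-bit mode.
loop_tail = [("add", "rax, 1"), ("cmp", "rax, rcx"), ("jne", "loop_top")]
print(len(decode(loop_tail, long_mode=True, fuse_in_64bit=False)))  # 3 (Core 2 style)
print(len(decode(loop_tail, long_mode=True, fuse_in_64bit=True)))   # 2 (Nehalem style)
```

Compare-and-branch pairs close almost every loop, so saving one operation per iteration adds up quickly in 64-bit code.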

In Penryn the Loop Stream Detector (LSD) doubled as the instruction queue hardware, so relatively little extra logic was needed for this performance improvement. In Nehalem, though, the LSD has moved one stage further down the pipeline, after the decoders, and needs its own dedicated buffer.

Previously, the LSD would store up to 18 instructions and reissue them to the decoders, freeing the fetch and branch prediction hardware to power down or do other work. In Nehalem, instructions pass through the decoders once and the resulting micro-ops are stored in a larger 28 micro-op buffer; when the LSD detects a loop, it reissues these already-decoded micro-ops, so the loop doesn't have to be decoded again on every iteration. This saves a stage of work each time round the loop, making it faster even though the overall pipeline is longer, and the stages that now sit idle can be shut down or used for other things, making the design more efficient all round.
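The benefit is easiest to see by counting work. This hypothetical Python model assumes the LSD captures a loop after its first iteration and can only replay a loop body that fits entirely within the 28-entry micro-op buffer; real loop detection has further restrictions that the sketch doesn't model.

```python
from typing import Dict, List

LSD_CAPACITY = 28  # micro-op buffer size quoted in the text


def run_loop(loop_uops: List[str], iterations: int) -> Dict[str, int]:
    """Count micro-ops decoded versus replayed for a loop.

    Assumptions: the loop is captured after its first pass, and only a loop
    body that fits entirely in the buffer can be replayed.
    """
    stats = {"decoded": 0, "replayed": 0}
    fits = len(loop_uops) <= LSD_CAPACITY
    for iteration in range(iterations):
        if fits and iteration > 0:
            # Reissue already-decoded micro-ops; fetch and decode can idle.
            stats["replayed"] += len(loop_uops)
        else:
            # Fetch and decode the loop body as normal.
            stats["decoded"] += len(loop_uops)
    return stats


print(run_loop(["uop"] * 10, 100))  # {'decoded': 10, 'replayed': 990}
print(run_loop(["uop"] * 30, 100))  # {'decoded': 3000, 'replayed': 0}
```

A small loop is decoded once and replayed 99 times, while a loop just two micro-ops too large for the buffer gets no help at all.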

Next, we dive into the Execution Engine and the out-of-order core, which have been beefed up in places to cope with Hyper Threading's competing demands on the hardware, while also improving performance in general. As decoded micro-ops enter the Execution Engine they meet a new 28 micro-op buffer, compared to roughly seven on Core 2. This extra capacity is important because the engine can still only issue four micro-ops per clock, just like Penryn, so more storage is needed to hold the extra micro-ops that two threads generate.

The Reorder Buffer has been made a third larger, up from 96 to 128 entries, and the Reservation Station (which schedules operations to available execution units) has gained four extra slots for a total of 36 entries. The actual execution logic remains the same: the Dynamic four-wide execution core, the 128-bit wide SSE "Advanced Digital Media Boost" units and the Super Shuffle Engine have been carried over directly from Penryn. This can execute up to six operations per clock cycle: three memory operations (a load, a store address and a store data) and three computational operations.
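The Reorder Buffer is what lets instructions execute out of order yet still appear to finish in program order, and its size caps how many micro-ops can be in flight at once. The simplified Python model below illustrates that behaviour; the class, the op identifiers and the default retire width of four per call are assumptions for illustration rather than details from Intel.

```python
from collections import deque
from typing import Deque, Hashable, List, Set


class ReorderBuffer:
    """Toy reorder buffer: allocate in order, complete in any order, retire in order."""

    def __init__(self, capacity: int = 128) -> None:
        self.capacity = capacity                 # 128 entries on Nehalem, 96 on Penryn
        self.entries: Deque[Hashable] = deque()  # ops in program order
        self.done: Set[Hashable] = set()         # ops that have finished executing

    def allocate(self, op_id: Hashable) -> bool:
        if len(self.entries) == self.capacity:
            return False  # buffer full: the front end has to stall
        self.entries.append(op_id)
        return True

    def complete(self, op_id: Hashable) -> None:
        # Execution units may finish operations out of program order.
        self.done.add(op_id)

    def retire(self, width: int = 4) -> List[Hashable]:
        # Retire from the oldest entry only, stopping at the first unfinished op.
        retired: List[Hashable] = []
        while self.entries and len(retired) < width and self.entries[0] in self.done:
            op_id = self.entries.popleft()
            self.done.discard(op_id)
            retired.append(op_id)
        return retired


# Usage: ops 1 and 2 finish before op 0, but nothing retires until op 0 does.
rob = ReorderBuffer(capacity=3)
for op in range(3):
    rob.allocate(op)
print(rob.allocate(3))  # False: the buffer is full
rob.complete(2)
rob.complete(1)
print(rob.retire())     # []
rob.complete(0)
print(rob.retire())     # [0, 1, 2]
```

One slow operation at the head of the buffer holds up everything behind it, which is why a larger buffer leaves more room for two threads' worth of in-flight work.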

Since a single thread will rarely issue all six operations at once, this suggests Penryn left its Execution Engine underused to some degree. With this in mind, Intel expects Nehalem to handle "twice as much" with mostly the same execution hardware and only slightly wider buffers than before.

Finally, Nehalem considerably increases the number of load and store buffers, from 32 and 20 to 48 and 32 respectively, to hold the extra in-flight memory operations needed to keep both threads fed.

Overall, there are some efficiency improvements, but most of Nehalem's pipeline has been built to cope with the pressures of running two threads at once. Lengthening it was inevitable, and while it is likely slightly longer than that of Intel's old Northwood processor, which also introduced SMT, its far lower latency and more efficient memory access than NetBurst ever offered mean Nehalem should remain relatively efficient.

Effectively, the Nehalem architecture is Intel's attempt to get the best out of both its Hillsboro and Haifa design teams' philosophies. It combines the power-efficient pipeline descended from the P6, Pentium M, Core and Core 2 era with the resource-efficient width that the Pentium 4 later tried to achieve, but without NetBurst's excessive pipeline depth.
